The IT Industry’s Ethical AI Dilemma: Is Building Ethical AI an Impossible Task?

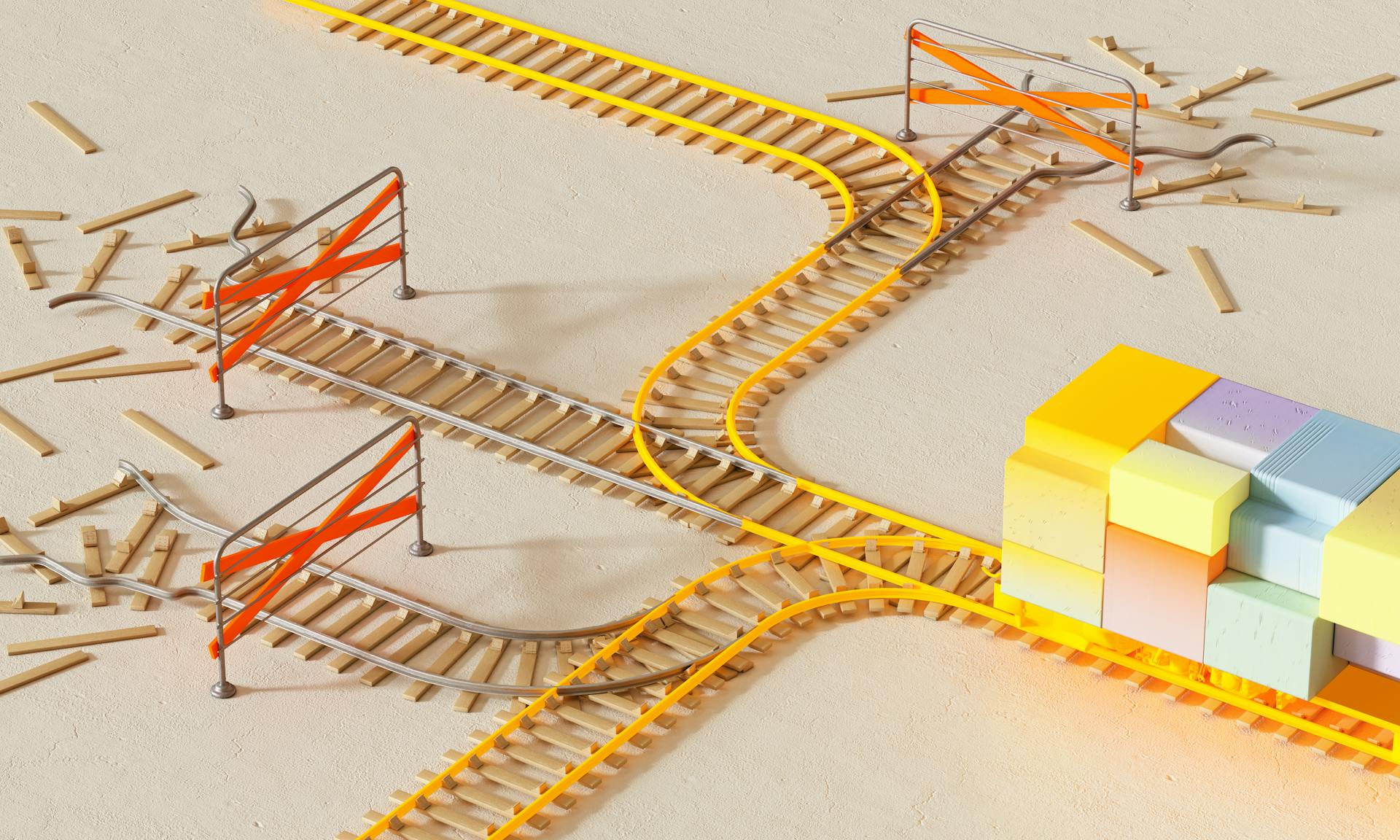

Hundreds of companies around the world are racing to build new artificial intelligence (AI) models to solve pressing problems and optimize processes. This race can create additional risks for ordinary people, including bias, misuse, and privacy violations. As AI takes root across global industries, it brings with it a dilemma: can we really create ethical AI, or is it an impossible task?

Ethical AI?

Unlike regulated areas such as law or finance, where the general principles are clearer, the ethics of AI can vary greatly depending on societal attitudes, legal regulation, and the field in which AI is used. For example:

- Should AI systems in medicine be subject to stricter ethical scrutiny?

- Is it right for a judge to ask a neural network to decide a sentence?

- Should companies be allowed to collect your preferences and push products on you?

These questions only scratch the surface; similar ones arise in education, finance, work with children, retail, defense, and other fields. Moreover, what is considered ethical in one region or sector may be considered unethical in another. AI models are also only as objective as the data they are trained on, and recent studies have pointed to possible racial bias in systems used to screen people for employment, lending, or even law enforcement. This creates a need for adaptive, context-sensitive AI systems that can respect different ethical frameworks. However, such flexibility is difficult to encode into complex algorithms that often operate within rigid frameworks. Given this diversity, there are no quick solutions for creating a universally ethical AI.

Privacy and data ownership

Many modern AI models rely on large volumes of information provided by users, which improves their ability to analyze, learn, and adapt. Yet few people stop to consider that much of what is being processed is users’ personal data. This can include information about the work, education, and private lives of the people who turn to AI for help.

According to recent studies, few users of such systems think carefully about the data they provide, and even fewer read how their personal data is processed. As AI becomes capable of processing ever more complex data, a question arises: who really owns the data and the insights AI produces from it? Balancing AI’s need for data against people’s right to privacy is one of the most serious obstacles to the development of responsible AI.

Legislative regulation

A clear legal framework is one of the most important aspects of the ethical development of AI. Legislative regulation can be aimed at:

- Setting standards for AI accountability and transparency by requiring companies to disclose how their algorithms make decisions;

- Preventing discrimination by ensuring that AI algorithms do not contain built-in biases or otherwise disadvantage certain social groups;

- Defining liability for harm caused by AI systems and establishing mandatory safety testing, especially in critical areas of activity;

- Protecting privacy and data, for example, by restricting the collection and use of certain sensitive user data.

But a balance must be struck here. While such restrictions are intended to increase trust in AI and prevent harmful consequences, they can also hinder innovation.

Strict regulation can impede rapid progress, especially for small startups that may simply lack the resources to comply with an extensive legal framework. The balance between regulation and innovation is therefore a delicate one, and missteps could limit AI’s potential to make a positive contribution to society.

Need for cooperation

Given these challenges, many argue that ethical AI development is best achieved through collaboration between different stakeholders, including developers, policymakers, ethicists, and end users. Incorporating different perspectives into development and governance can lead to more holistic, inclusive approaches to the ethical use of AI models across different populations.

Initiatives like AiEi have emerged as a way for organizations to assess the moral implications of AI projects before they are deployed. Achieving ethical AI is not impossible, but it requires sustained effort, transparency, and a willingness to adapt. While the goal of fully ethical AI may remain elusive, the path to it is crucial and offers valuable insights into the broader ethical landscape in technology.

Ethical AI is not something that can be achieved with a single algorithm or framework; it is a moving target that evolves with technology and societal expectations. For ethical AI to succeed, it must be viewed as a process that involves ongoing dialogue, adaptive policies, and an unwavering commitment to user rights and social justice. It is a challenging task involving trade-offs, but with perseverance, collaboration, and thoughtful regulation, we may find that ethical AI is not completely out of reach.

What needs to be changed

To address ethical issues in AI development, the industry needs standardized ethical certification and robust accountability practices. Industry-wide certification could establish clear, binding guidelines for AI development and deployment, helping organizations integrate ethics from the ground up. This would involve auditing systems for fairness, transparency, and accountability throughout their lifecycle, moving away from the “fix it later” approach that is currently widespread.

Such guidelines should also establish clear safeguards against algorithmic discrimination, emphasize transparency toward users and the need for human oversight, and provide a roadmap for addressing risks such as data bias and privacy concerns, guiding companies to use AI responsibly.

Such measures could form the basis for a more formal certification that ensures compliance with ethical standards across industries. This would make it impossible for companies to ignore ethical norms, since documented assessments and proof of compliance would be required before an AI product enters the market. Ultimately, this would strengthen public trust in AI by ensuring that innovation does not come at the expense of human rights or social justice.

AI Horizon Conference

The AI Horizon Conference brought together entrepreneurs, investors and industry leaders in Lisbon to discuss key trends and shape the future of AI.
